Introduction to Properties of OLS Estimators

Linear regression models have several applications in real life. In econometrics, the Ordinary Least Squares (OLS) method is widely used to estimate the parameters of a linear regression model. For OLS estimates to be valid, the following assumptions are made when running linear regression models.

A1. The linear regression model is “linear in parameters.”

A2. There is a random sampling of observations.

A3. The conditional mean of the error term, given the independent variables, should be zero.

A4. There is no multi-collinearity (or perfect collinearity).

A5. Spherical errors: There is homoscedasticity and no auto-correlation.

A6. Optional assumption: Error terms should be normally distributed.

These assumptions are extremely important because a violation of any of them can make OLS estimates unreliable. Specifically, a violation can result in OLS estimates with incorrect signs, or in unreliable variance estimates, leading to confidence intervals that are too wide or too narrow.

That being said, it is worth investigating why OLS estimators and their assumptions receive so much attention. In this article, the properties of OLS estimators are discussed. First, the famous Gauss-Markov Theorem is outlined. Thereafter, the properties of OLS estimators are described in detail. In the end, the article briefly talks about the applications of the properties of OLS in econometrics.

The Gauss-Markov Theorem

The Gauss-Markov Theorem is named after Carl Friedrich Gauss and Andrey Markov.

Let the regression model be: Y = { \beta }_{ o } + { \beta }_{ i }{ X }_{ i } + \varepsilon

Let { b }_{ o } and { b }_{ i } be the OLS estimators of { \beta }_{ o } and { \beta }_{ i }.

According to the Gauss-Markov Theorem, under the assumptions A1 to A5 of the linear regression model, the OLS estimators { b }_{ o } and { b }_{ i } are the Best Linear Unbiased Estimators (BLUE) of { \beta }_{ o } and { \beta }_{ i }.

In other words, the OLS estimators { b }_{ o } and { b }_{ i } have the minimum variance among all linear and unbiased estimators of { \beta }_{ o } and { \beta }_{ i }. BLUE summarizes the properties of OLS regression. These properties are extremely important and make OLS estimators some of the strongest and most widely used estimators of unknown parameters. The theorem says that one should use OLS estimators not only because they are unbiased but also because they have the minimum variance within the class of all linear and unbiased estimators.

Properties of OLS Regression Estimators in Detail

Property 1: Linear

This property concerns the estimator rather than the original equation that is being estimated. In assumption A1, the focus was that the linear regression should be “linear in parameters.” The linear property of the OLS estimator, however, means that OLS belongs to the class of estimators that are linear in Y, the dependent variable: each estimate is a weighted sum of the observed values of Y. Note that OLS estimators are linear only with respect to the dependent variable and not necessarily with respect to the independent variables. The linear property of OLS estimators doesn’t depend only on assumption A1 but on all assumptions A1 to A5.
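
The following is a minimal sketch of what "linear in Y" means for the slope in a one-regressor model. The data are synthetic, and the variable names and parameter values (2.0, 0.5, the seed) are assumptions chosen only for illustration. The weights are built from X alone, and the weighted sum of Y is compared with the slope returned by NumPy's `polyfit`.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, used only for reproducibility

# Synthetic data (assumed values, for illustration only)
x = rng.uniform(0.0, 10.0, size=20)
y = 2.0 + 0.5 * x + rng.normal(0.0, 1.0, size=20)

# The weights depend only on x, never on y
x_centered = x - x.mean()
w = x_centered / np.sum(x_centered ** 2)

# OLS slope written as a weighted sum (a linear function) of the y values
b_i_weighted = np.sum(w * y)

# Reference value: slope from a standard degree-1 least-squares fit
b_i_polyfit = np.polyfit(x, y, deg=1)[0]

print(b_i_weighted, b_i_polyfit)
```

The two printed values should agree up to floating-point error, since both compute the same least-squares slope.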

Property 2: Unbiasedness

If you look at the regression equation, you will find an error term associated with it. This makes the dependent variable random as well. If an estimator uses the dependent variable, then that estimator is also a random variable. Therefore, before describing what unbiasedness is, it is important to mention that unbiasedness is a property of the estimator and not of any particular sample.

Unbiasedness is one of the most desirable properties of any estimator. The estimator should ideally be an unbiased estimator of true parameter/population values.

Consider a simple example: Suppose there is a population of size 1000, and you are taking out samples of 50 from this population to estimate the population parameters. Every time you take a sample, it will contain a different set of 50 observations and, hence, you would obtain different estimates { b }_{ o } and { b }_{ i }. The unbiasedness property of the OLS method says that when you take out samples of 50 repeatedly, the average of all the { b }_{ o } and { b }_{ i } from the samples will approach the actual (or the population) values of { \beta }_{ o } and { \beta }_{ i }.

Mathematically,

E\left( { b }_{ o } \right) = { \beta }_{ o }

E\left( { b }_{ i } \right) = { \beta }_{ i }

Here, ‘E’ is the expectation operator.

In layman’s terms, if you take out several samples, keep recording the values of the estimates, and then take an average, you will get very close to the correct population value. If your estimator is biased, then the average will not converge to the true parameter value in the population.
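
The repeated-sampling idea can be sketched with a small simulation. This is only an illustration under stated assumptions: instead of a fixed population of 1000, each sample of 50 is drawn from an assumed linear model, so the true values (here 2.0 and 0.5, chosen arbitrarily) are known exactly. The number of repetitions and the error distribution are also assumptions.

```python
import numpy as np

rng = np.random.default_rng(42)  # fixed seed, used only for reproducibility

beta_o, beta_i = 2.0, 0.5        # assumed true parameters
n, n_samples = 50, 5000          # sample size and number of repeated samples

estimates = np.empty((n_samples, 2))
for s in range(n_samples):
    # Draw a fresh sample from the assumed model Y = beta_o + beta_i * X + error
    x = rng.uniform(0.0, 10.0, size=n)
    y = beta_o + beta_i * x + rng.normal(0.0, 1.0, size=n)

    # OLS fit: the column of ones gives the intercept b_o, x gives the slope b_i
    X = np.column_stack([np.ones(n), x])
    estimates[s] = np.linalg.lstsq(X, y, rcond=None)[0]

mean_b = estimates.mean(axis=0)
print("average b_o, b_i over all samples:", mean_b)
print("one single sample's b_o, b_i:     ", estimates[0])
```

Any single row of `estimates` can be noticeably off, while the average across samples should sit close to the assumed true values.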

The unbiasedness property of OLS in Econometrics is the basic minimum requirement to be satisfied by any estimator. However, it is not sufficient for the reason that most times in real-life applications, you will not have the luxury of taking out repeated samples. In fact, only one sample will be available in most cases.

Property 3: Best: Minimum Variance

First, let us look at what efficient estimators are. An estimator is efficient if it is the minimum variance unbiased estimator. That is, among all the unbiased estimators of the unknown population parameter, the efficient estimator is the one with the least variance. An estimator with less variance produces estimates that lie closer to their mean across samples. As a result, it is more likely to give accurate results than estimators with higher variance. In short:

- If the estimator is unbiased but doesn’t have the least variance – it’s not the best!

- If the estimator has the least variance but is biased – it’s again not the best!

- If the estimator is both unbiased and has the least variance – it’s the best estimator.

Now, talking about OLS, OLS estimators have the least variance among the class of all linear unbiased estimators. So, this property of OLS regression is less strict than the efficiency property. The efficiency property requires the least variance among all unbiased estimators, whereas OLS estimators have the least variance among all linear and unbiased estimators.

Denoting this mathematically,

Let { b }_{ o } be the OLS estimator, which is linear and unbiased. Let { b }_{ o }^{ \ast } be any other estimator of { \beta }_{ o }, which is also linear and unbiased. Then,

Var\left( { b }_{ o } \right) \le Var\left( { b }_{ o }^{ \ast } \right)

Let { b }_{ i } be the OLS estimator, which is linear and unbiased. Let { b }_{ i }^{ \ast } be any other estimator of { \beta }_{ i }, which is also linear and unbiased. Then,

Var\left( { b }_{ i } \right) \le Var\left( { b }_{ i }^{ \ast } \right)

The above three properties of the OLS model make OLS estimators BLUE, as mentioned in the Gauss-Markov theorem.
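
To make the "best" property concrete, here is a small sketch that compares the OLS slope with one alternative estimator that is also linear in Y and unbiased: the slope of the line through the first and last observations. The alternative estimator, the fixed regressor values, and the parameter values are assumptions picked for illustration; the Gauss-Markov theorem covers every linear unbiased estimator, not only this one.

```python
import numpy as np

rng = np.random.default_rng(7)   # fixed seed, used only for reproducibility

beta_o, beta_i, sigma = 2.0, 0.5, 1.0   # assumed true parameters and error s.d.
x = np.linspace(0.0, 10.0, 50)          # regressor values held fixed across samples
n_samples = 5000

x_centered = x - x.mean()
ols_slopes = np.empty(n_samples)
endpoint_slopes = np.empty(n_samples)

for s in range(n_samples):
    y = beta_o + beta_i * x + rng.normal(0.0, sigma, size=x.size)

    # OLS slope: weighted sum of y with weights (x - mean(x)) / sum((x - mean(x))^2)
    ols_slopes[s] = np.sum(x_centered * y) / np.sum(x_centered ** 2)

    # Alternative linear unbiased estimator: slope through the two end points
    endpoint_slopes[s] = (y[-1] - y[0]) / (x[-1] - x[0])

print("mean of OLS slopes:     ", ols_slopes.mean())
print("mean of endpoint slopes:", endpoint_slopes.mean())
print("variance of OLS slopes:     ", ols_slopes.var())
print("variance of endpoint slopes:", endpoint_slopes.var())
```

Both averages should land near the assumed slope of 0.5, but the endpoint estimator ignores most of the data, so its estimates should be spread much more widely around that value.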

It is worth spending time on some other properties of OLS estimators. So far, the finite sample properties of OLS regression were discussed. These properties describe the behavior of the OLS estimator under the assumption that you can draw several samples and, hence, obtain several estimates of the same unknown population parameter. In short, the average of these estimates across samples should equal the true population parameter (unbiasedness), and their spread around the true parameter value should be as small as possible (efficiency). However, in real life, you will often have just one sample. Hence, the asymptotic properties of OLS estimators are discussed below; they describe how OLS estimators behave as the sample size increases. Keep in mind that these properties are informative only when the sample size is large.

Property 4: Asymptotic Unbiasedness

This property of OLS says that as the sample size increases, any bias in the OLS estimators disappears.

Property 5: Consistency

An estimator is said to be consistent if its value approaches the actual, true parameter (population) value as the sample size increases. An estimator is consistent if it satisfies the following two conditions, which together are sufficient:

a. It is asymptotically unbiased

b. Its variance converges to 0 as the sample size increases.

Both of these hold true for OLS estimators and, hence, they are consistent estimators. For an estimator to be useful, consistency is the minimum basic requirement. Since there may be several such estimators, asymptotic efficiency is also considered. Asymptotic efficiency is the sufficient condition that makes OLS estimators the best estimators.

Applications and How They Relate to the Study of Econometrics
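
Consistency can be illustrated with a short simulation that tracks the spread of the OLS slope as the sample size grows. The helper name `slope_estimates`, the sample sizes, and the parameter values are hypothetical choices for this sketch, not part of any library.

```python
import numpy as np

rng = np.random.default_rng(123)  # fixed seed, used only for reproducibility

beta_o, beta_i = 2.0, 0.5         # assumed true parameters


def slope_estimates(n, n_reps, rng):
    """Return n_reps OLS slope estimates, each from a fresh sample of size n."""
    x = rng.uniform(0.0, 10.0, size=(n_reps, n))
    y = beta_o + beta_i * x + rng.normal(0.0, 1.0, size=(n_reps, n))
    xc = x - x.mean(axis=1, keepdims=True)
    # Row-wise OLS slope: sum((x - mean) * y) / sum((x - mean)^2)
    return np.sum(xc * y, axis=1) / np.sum(xc ** 2, axis=1)


sample_sizes = [20, 200, 2000]
results = {n: slope_estimates(n, 500, rng) for n in sample_sizes}

for n in sample_sizes:
    print(f"n={n:5d}  mean slope={results[n].mean():.4f}  "
          f"variance={results[n].var():.6f}")
```

As the sample size increases, the mean should stay near the assumed slope while the variance shrinks toward zero, which is the pattern that conditions a and b describe.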

OLS estimators, because of such desirable properties discussed above, are widely used and find several applications in real life.

Example: Consider a bank that wants to predict the exposure of a customer at default. The bank can take the exposure at default to be the dependent variable and several independent variables like customer level characteristics, credit history, type of loan, mortgage, etc. The bank can simply run OLS regression and obtain the estimates to see which factors are important in determining the exposure at default of a customer. OLS estimators are easy to use and understand. They are also available in various statistical software packages and can be used extensively.
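
A rough sketch of such a regression is shown below. Everything in it is hypothetical: the data are synthetic rather than real bank data, and the variable names (`credit_limit`, `utilization`, `is_mortgage`) and coefficient values are assumptions made up for the illustration. It uses NumPy's `lstsq` to obtain the OLS estimates and checks assumption A4 through the rank of the design matrix.

```python
import numpy as np

rng = np.random.default_rng(1)   # fixed seed, used only for reproducibility
n = 500

# Hypothetical customer-level variables (synthetic, not real bank data)
credit_limit = rng.uniform(1.0, 50.0, size=n)   # in thousands
utilization = rng.uniform(0.0, 1.0, size=n)     # share of the limit drawn
is_mortgage = rng.integers(0, 2, size=n)        # 1 if the loan is a mortgage

# Design matrix with an intercept column
X = np.column_stack([np.ones(n), credit_limit, utilization, is_mortgage])

# Assumed data-generating process for exposure at default (in thousands)
true_coefs = np.array([1.0, 0.6, 8.0, 3.0])
exposure = X @ true_coefs + rng.normal(0.0, 2.0, size=n)

# A4 check: no perfect collinearity means X has full column rank
full_rank = np.linalg.matrix_rank(X) == X.shape[1]

# OLS estimates of the intercept and the three slope coefficients
b = np.linalg.lstsq(X, exposure, rcond=None)[0]

names = ["intercept", "credit_limit", "utilization", "is_mortgage"]
for name, est, true in zip(names, b, true_coefs):
    print(f"{name:>13}: estimate={est:7.3f}  assumed true value={true:5.2f}")
```

With real data the true coefficients are unknown, so the estimates would be read together with their standard errors rather than compared against known values.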

OLS regressions form the building blocks of econometrics. Any econometrics class will start with the assumptions of OLS regression. It is one of the favorite interview questions for jobs and university admissions. By building on OLS and relaxing its assumptions, several different models have been developed, such as GLM (generalized linear models), general linear models, heteroscedastic models, multi-level regression models, etc.

Research in Economics and Finance is highly driven by Econometrics, and OLS is the building block of Econometrics. In real life, however, there are issues, such as reverse causality, that render OLS inappropriate. Even so, OLS can still be used to investigate the issues that exist in cross-sectional data. Even when the OLS method cannot be used for the final regression, it is used to uncover the problems and point to potential fixes.

Conclusion

To conclude, linear regression is important and widely used, and OLS is its most prevalent estimation technique. For that reason, this article discussed the properties of OLS estimators. OLS estimators are BLUE (i.e. they are linear, unbiased and have the least variance among the class of all linear and unbiased estimators). Amidst all this, one should not forget that the Gauss-Markov Theorem (i.e. the estimators of the OLS model are BLUE) holds only if the assumptions of OLS are satisfied. Each assumption that is made while studying OLS adds restrictions to the model but, at the same time, allows one to make stronger statements regarding OLS. So, whenever you are planning to estimate a linear regression model using OLS, always check the OLS assumptions. If the OLS assumptions are satisfied, then life becomes simpler, for you can directly use OLS for the best results, thanks to the Gauss-Markov theorem!

Have we answered all your questions? Let us know how we are doing!

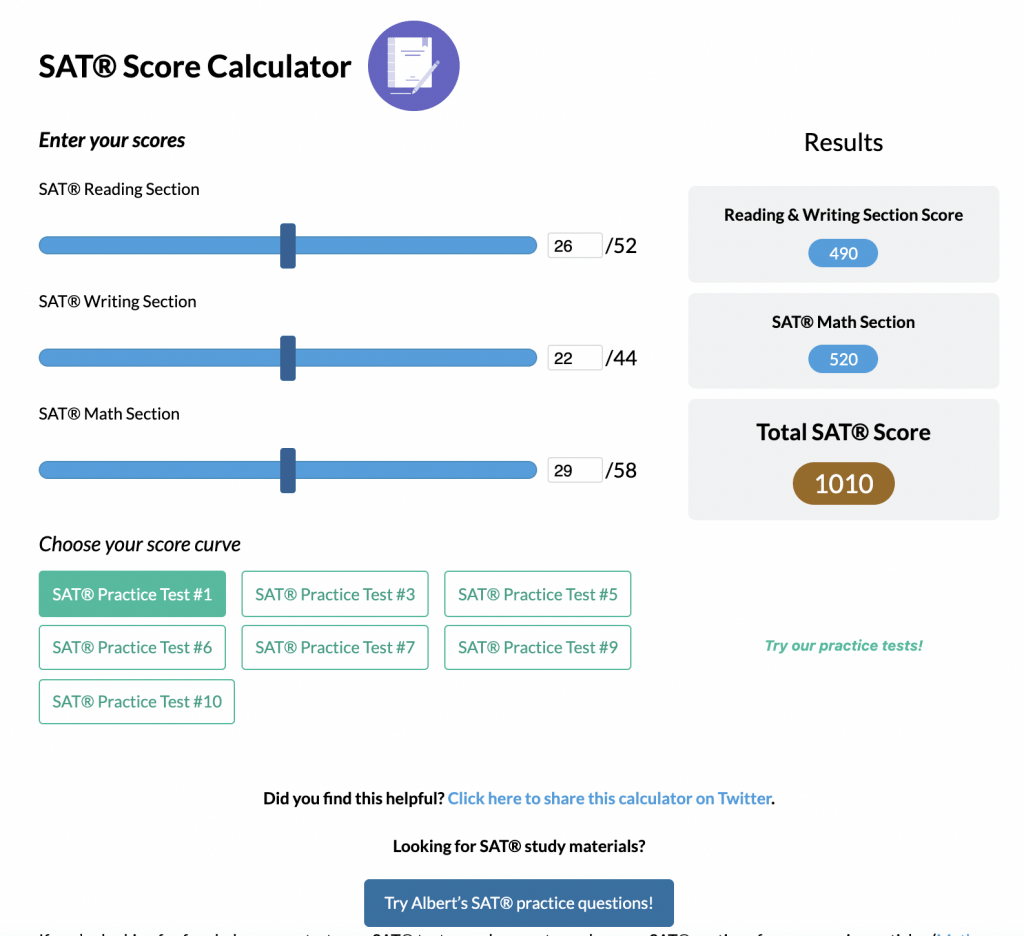

Looking for Econometrics practice?

Kickstart your Econometrics prep with Albert. Start your Econometrics exam prep today.